Active Learning for Accelerated Interactive Physical Facial Performance Design

Abstract

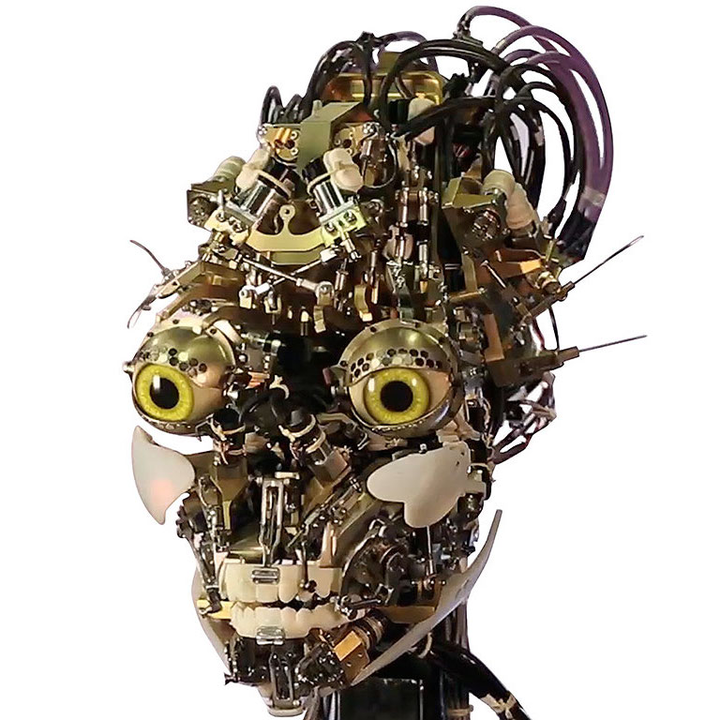

We present a practical neural computational approach for the interactive design of animatronic facial performances. An offline quasi-static reference simulation, driven by a coupled mechanical assembly, accurately predicts hyperelastic skin deformations. To achieve interactive digital pose design, we train a shallow fully connected neural network (KSNN) to predict the simulated mesh vertex positions from input motor activations. Our fully automatic synthetic training algorithm enables a first-of-its-kind Active Learning framework (GEN-LAL) for generative modeling of facial pose simulations. With adaptive selection, we reduce training time to no more than half that of the unmodified training approach for each new animatronic figure.
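To make the surrogate-plus-selection idea concrete, here is a minimal sketch of a shallow fully connected network that maps motor activations to flattened vertex coordinates, followed by a generic pool-based selection step. It is not the GEN-LAL algorithm: the committee-disagreement score is a common active learning heuristic swapped in for illustration, and all names and sizes (`N_MOTORS`, `HIDDEN`, `N_VERTS`, `select_queries`) are hypothetical. Bias terms and the training step are omitted for brevity.

```python
import math
import random

N_MOTORS, HIDDEN, N_VERTS = 4, 8, 5   # hypothetical sizes, not from the paper


def init_net(rng):
    """Random weights for a one-hidden-layer fully connected network."""
    def mat(rows, cols):
        return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]
    return {"W1": mat(HIDDEN, N_MOTORS), "W2": mat(3 * N_VERTS, HIDDEN)}


def predict(net, activations):
    """Map N_MOTORS activations to a flat list of 3 * N_VERTS coordinates."""
    hidden = [math.tanh(sum(w * a for w, a in zip(row, activations)))
              for row in net["W1"]]
    return [sum(w * h for w, h in zip(row, hidden)) for row in net["W2"]]


def disagreement(committee, activations):
    """Mean per-coordinate variance of the committee's predictions."""
    preds = [predict(net, activations) for net in committee]
    total = 0.0
    for coords in zip(*preds):
        mean = sum(coords) / len(coords)
        total += sum((c - mean) ** 2 for c in coords) / len(coords)
    return total / (3 * N_VERTS)


def select_queries(committee, pool, k):
    """Indices of the k pool entries with the highest disagreement."""
    scores = [disagreement(committee, a) for a in pool]
    return sorted(range(len(pool)), key=lambda i: scores[i], reverse=True)[:k]


rng = random.Random(0)
committee = [init_net(rng) for _ in range(3)]                 # untrained stand-ins
pool = [[rng.random() for _ in range(N_MOTORS)] for _ in range(50)]
chosen = select_queries(committee, pool, k=5)                 # poses to simulate next
```

In a full loop, the activations at the `chosen` indices would be passed to the offline reference simulation to obtain ground-truth vertex positions, the networks would be retrained on the enlarged set, and the selection would repeat until a stopping criterion is met.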

Publication

The Journal of Computer Graphics Techniques (JCGT)